Historically, reinforcement learning models required the programmer to explicitly define the features that make up the state space. Recent breakthroughs in deep reinforcement learning have removed the need to hand-craft a feature vector, allowing models to learn feature representations suited to the environment. These learned features often outperform explicitly engineered ones.

Using only the raw pixel stream as input, an agent was successfully trained to learn control policies in an Atari game environment. The underlying model is a convolutional neural network trained with a variant of Q-learning. The Atari environment used for running simulations and training the agent was created with the OpenAI Gym platform.
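To make the training signal concrete, here is a minimal sketch of the target used in standard one-step Q-learning. It is not necessarily the exact variant used in this project. The function names and the discount factor `gamma` are assumptions introduced for illustration.

```python
def q_learning_target(reward, next_q_values, done, gamma=0.99):
    """One-step Q-learning target: r + gamma * max_a' Q(s', a').

    `gamma` is an assumed discount factor, not a value from the project.
    """
    if done:
        # Terminal transition: no future reward to bootstrap from.
        return reward
    return reward + gamma * max(next_q_values)


def td_error(q_value, target):
    """Difference between the target and the current estimate Q(s, a)."""
    return target - q_value


# Example: reward 1.0, and the network estimates Q-values [0.5, 2.0] for the next state.
target = q_learning_target(1.0, [0.5, 2.0], done=False, gamma=0.9)
error = td_error(q_value=2.0, target=target)
```

In a deep Q-learning setup, the network is typically trained to shrink this error, for example by minimizing its square over sampled transitions.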

Motivation

The end objective of this project was to train a neural network to play standard Atari arcade games using only the visual frame buffer as input to the model. The agent would therefore learn to play in broadly the same way humans do, using the same visual cues and automatically identifying which actions have positive or negative impacts.

Procedure

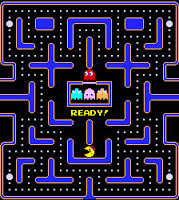

The simulations in this project ran on an implementation of the well-known Atari games provided by Gym, the open-source environment toolkit from OpenAI. Gym makes it easy for researchers to test their algorithms without having to build environments themselves. For this project, Ms. Pacman was used, and two convolutional layers were constructed along with a fully connected neural network classifier to predict actions based on the current state.
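As a rough illustration of this architecture, the sketch below traces how a game frame shrinks as it passes through two convolutional layers, and how many features reach the fully connected classifier. The kernel sizes, strides, and channel counts are assumptions chosen for illustration; the project's actual hyperparameters are not stated. The 210x160 frame size is the default RGB observation shape of Gym's Atari environments.

```python
def conv_output_size(size, kernel, stride):
    """Spatial size after a convolution with no padding."""
    return (size - kernel) // stride + 1


def trace_shapes(height, width, layers):
    """Return the (height, width, channels) shape after each conv layer."""
    shapes = []
    for kernel, stride, channels in layers:
        height = conv_output_size(height, kernel, stride)
        width = conv_output_size(width, kernel, stride)
        shapes.append((height, width, channels))
    return shapes


# Assumed hyperparameters (not from the project): (kernel, stride, output channels).
ASSUMED_LAYERS = [(8, 4, 16), (4, 2, 32)]

# Gym's Atari environments emit 210x160 RGB frames by default.
shapes = trace_shapes(210, 160, ASSUMED_LAYERS)
h, w, c = shapes[-1]
flat_features = h * w * c  # input width of the fully connected classifier
print(shapes, flat_features)  # [(51, 39, 16), (24, 18, 32)] 13824
```

Under these assumed settings, the classifier would map 13824 flattened features to one output per available action.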

Observations and Results

The behavior of the agent was analyzed during and after training as it learned to navigate the environment and avoid obstacles to maximize reward. In certain instances the agent showed peculiar behavior, especially when it got stuck in a local minimum of the loss function. One possible reason is that, even though the agent receives a reward of 0.0 in such situations, ghost attacks are less frequent in the corners than near the center of the environment.

Another interesting behavior appeared when the agent attained sudden high scores during training. This happens because the agent learns that, after it collects a special collectible block, colliding with a ghost destroys the ghost and earns extra points. The agent discovered this property of the environment through the random exploration that the algorithm's exploration parameter introduces.
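One common way to build this kind of random exploration into Q-learning is epsilon-greedy action selection, sketched below. The text does not name the exploration scheme or its parameter, so both the technique and the name `epsilon` are assumptions, as is the helper `select_action`.

```python
import random


def select_action(q_values, epsilon, rng=random):
    """Epsilon-greedy choice over a list of Q-values.

    With probability `epsilon` (an assumed parameter name) a random action
    is taken; otherwise the action with the highest Q-value is chosen.
    """
    if rng.random() < epsilon:
        # Explore: any action, regardless of its current estimate.
        return rng.randrange(len(q_values))
    # Exploit: index of the largest Q-value.
    return max(range(len(q_values)), key=lambda a: q_values[a])


# Greedy choice when exploration is switched off.
best = select_action([0.1, 0.9, 0.3], epsilon=0.0)
```

Occasional random actions of this kind are what let an agent stumble on rewarding events it would not reach by always following its current estimates.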